Blogger: Erik Smistad, PostDoc at Centre for Innovative Ultrasound Solutions (CIUS)

Neural networks have recently achieved incredible results for recognising objects such as cats, coffee cups, cars and plants in photographs. These methods are already used by companies like Facebook and Google to identify faces, recognise voice commands and even enable self-driving cars. At the Centre for Innovative Ultrasound Solutions (CIUS), we aim to use these methods to interpret ultrasound images. Ultrasound images can be challenging for humans to interpret, and learning to read them requires a lot of training. We believe neural networks can make it easier to use ultrasound, interpret the images, extract quantitative information, and even help diagnose the patient. This may ultimately improve patient care, reduce complications and lower costs.

Artificial neural networks are simplified versions of the neural networks in the human brain. These networks are able to learn directly from a set of images. By showing a neural network many images of a cat, the network is able to learn how to recognise a cat.

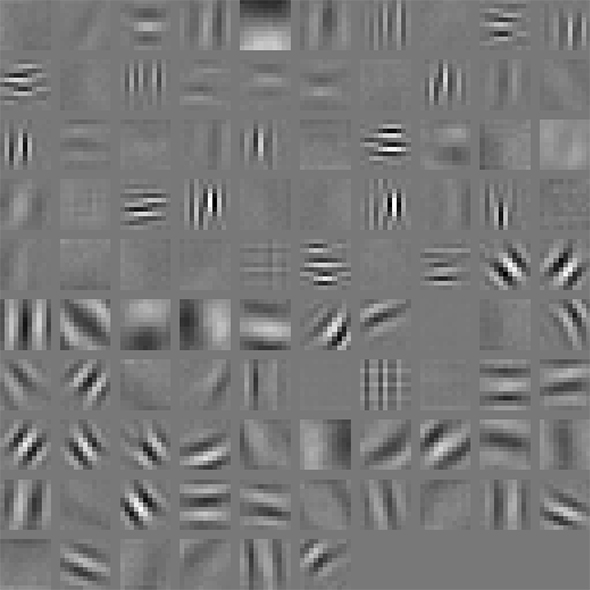

Neural networks are organized into several processing layers which learn different levels of image representation, from low-level representations such as edges, to high-level representations such as the shape of a cat. The image representations are used to distinguish one object from another. Over the years, the trend has been to increase the number of layers, which is why these methods are referred to as deep neural networks and deep learning.
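To make the idea of a low-level representation concrete, here is a minimal sketch of a single edge filter applied to a toy image. The `filter_response` helper, the 6x6 image and the hand-written Sobel weights are illustrative assumptions, not taken from our networks; a trained network learns its own filter weights from data rather than having them written by hand.

```python
def filter_response(image, kernel):
    """Slide `kernel` over `image` (no padding) and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy 6x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-written vertical-edge filter (Sobel), assumed here for illustration.
sobel_x = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

response = filter_response(image, sobel_x)
for row in response:
    print(row)  # each row is [0, 4, 4, 0]
```

The response is zero in the flat regions and nonzero only where the window straddles the boundary between dark and bright, which is what "detecting an edge" means at this level. Deeper layers combine many such responses into representations of larger shapes.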

Experiments have shown that deeper neural networks can learn to do even more advanced tasks. Recently, such a deep neural network beat the world champion in the game Go – a game more complex than chess.

One of the challenges with these methods is to collect enough data. Many images are required for the neural network to learn the anatomical variation present in the human population and how the objects appear in ultrasound images. Also, the data must be labelled by experts, which can be a time-consuming process.

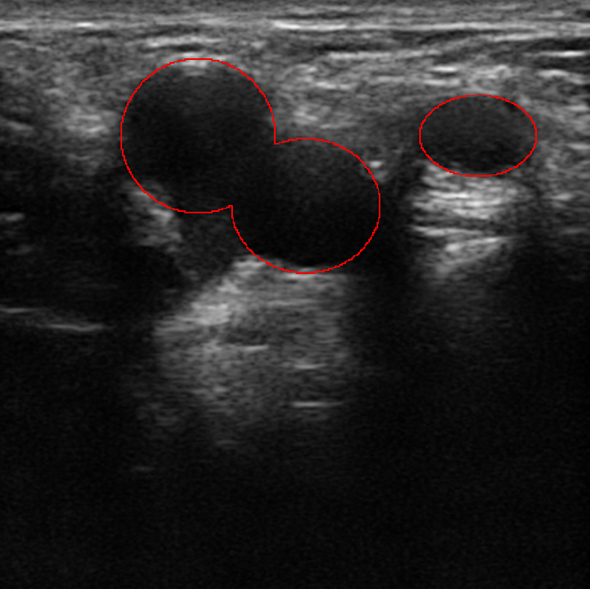

In a recent study, we created a neural network able to detect and highlight blood vessels in ultrasound images as shown in the figure below. This was done by training a neural network with over 10,000 examples of images with and without blood vessels. When the neural network is presented with fresh images, it is able to recognise blood vessels; it has learnt what a blood vessel looks like in an ultrasound image.

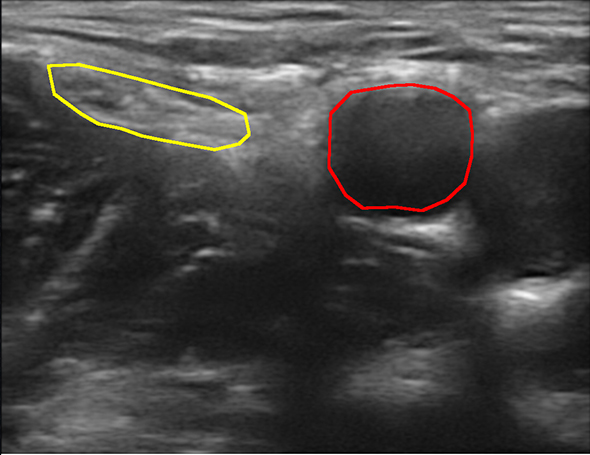
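The train-then-test procedure described above can be sketched in a few lines. This is not the network from the study: it is a single-layer classifier (logistic regression) trained on synthetic 6x6 noise images, where a bright 2x2 patch stands in for "contains a vessel". The image size, patch, noise level, learning rate and all function names are assumptions chosen only to keep the example small.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the sketch is repeatable
SIZE, PATCH = 6, 2      # toy image size and bright-patch size (arbitrary choices)

def make_example():
    """Return (pixels, label); label 1 means a bright patch was added to the noise."""
    img = [[rng.gauss(0.0, 0.1) for _ in range(SIZE)] for _ in range(SIZE)]
    label = rng.randint(0, 1)
    if label == 1:
        r = rng.randrange(SIZE - PATCH + 1)
        c = rng.randrange(SIZE - PATCH + 1)
        for i in range(r, r + PATCH):
            for j in range(c, c + PATCH):
                img[i][j] += 1.0
    return [p for row in img for p in row], label

def score(pixels, w, b):
    """Weighted sum of the pixels plus bias; positive means 'patch present'."""
    return sum(wi * xi for wi, xi in zip(w, pixels)) + b

def train(examples, steps=300, lr=0.5):
    """Fit one weight per pixel plus a bias by gradient descent on the logistic loss."""
    w, b = [0.0] * (SIZE * SIZE), 0.0
    n = len(examples)
    for _ in range(steps):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for pixels, label in examples:
            error = 1.0 / (1.0 + math.exp(-score(pixels, w, b))) - label
            grad_b += error
            for k, xk in enumerate(pixels):
                grad_w[k] += error * xk
        w = [wk - lr * gk / n for wk, gk in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

labelled = [make_example() for _ in range(200)]  # stands in for expert-labelled data
w, b = train(labelled)

fresh = [make_example() for _ in range(200)]     # images not seen during training
correct = sum((score(px, w, b) > 0) == (label == 1) for px, label in fresh)
accuracy = correct / len(fresh)
print(f"accuracy on fresh images: {accuracy:.2f}")
```

The important part is the last few lines: the classifier is evaluated on images it never saw during training, which is how one checks that it has learnt the pattern rather than memorised the examples. Real ultrasound images are far more varied than this toy data, which is why a deep network and thousands of labelled examples are needed in practice.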

Currently, we are investigating how these neural networks can locate structures such as nerves in ultrasound images, which can be difficult even for humans. The figure below shows the femoral nerve of the thigh in yellow, located automatically by an artificial neural network. Identifying these nerves is crucial when performing ultrasound-guided regional anesthesia.

Erik Smistad is a PostDoc at CIUS working on machine learning and segmentation techniques for ultrasound image understanding. You can learn more about his research on his own website: Erik Smistad.